
The term reliability in psychological research refers to the consistency of a quantitative research study or measuring test. For instruments like questionnaires, reliability ensures that responses are consistent across times and occasions. Multiple forms of reliability exist, including test-retest, inter-rater, and internal consistency.

For example, if a person weighs themselves several times during the day, they would expect to see a similar reading each time. Scales that gave a different weight each time would be of little use.

The same analogy could be applied to a tape measure that measures inches differently each time it is used. It would not be considered reliable.

If findings from research are replicated consistently, they are reliable. A correlation coefficient can be used to assess the degree of reliability. If a test is reliable, scores from repeated uses of the test should show a high positive correlation.

Of course, it is unlikely that exactly the same results will be obtained each time, as participants and situations vary. Still, a strong positive correlation between results of the same test indicates reliability.

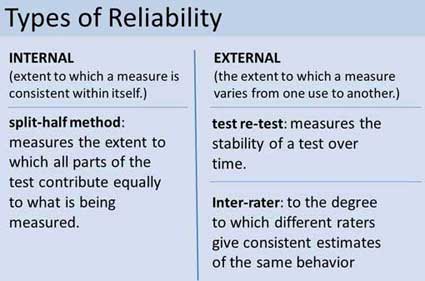

There are two types of reliability: internal and external.

- Internal reliability refers to how consistently different items within a single test measure the same concept or construct. It ensures that a test is stable across its components.

- External reliability measures how consistently a test produces similar results over repeated administrations or under different conditions. It ensures that a test is stable over time and situations.

Split-Half Method

The split-half method assesses the internal consistency of a test, such as psychometric tests and questionnaires.

It measures the extent to which all parts of the test contribute equally to what is being measured.

Here’s how it works:

- A test or questionnaire is split into two halves, typically by separating even-numbered items from odd-numbered items, or the first half of the items from the second half.

- Each half is scored separately, and the scores are correlated using a statistical method, often Pearson’s correlation.

- The correlation between the two halves gives an indication of the test’s reliability. A higher correlation suggests better reliability.

- To adjust for the test’s shortened length (because we’ve split it in half), the Spearman-Brown prophecy formula is often applied to estimate the reliability of the full test based on the split-half reliability.
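The steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a standard implementation: it uses an odd/even split, the scores are made up, and the function names `pearson_r` and `split_half_reliability` are hypothetical. The Spearman-Brown correction for a test split into two halves is r_full = 2 × r_half / (1 + r_half).

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)

def split_half_reliability(responses):
    """responses: one list of item scores per participant (hypothetical layout)."""
    # Odd-numbered items (1, 3, 5, ...) sit at indices 0, 2, 4, ...
    odd_totals = [sum(person[0::2]) for person in responses]
    # Even-numbered items (2, 4, 6, ...) sit at indices 1, 3, 5, ...
    even_totals = [sum(person[1::2]) for person in responses]
    # Correlate the two half-test scores across participants
    r_half = pearson_r(odd_totals, even_totals)
    # Spearman-Brown correction: estimate reliability of the full-length test
    r_full = 2 * r_half / (1 + r_half)
    return r_half, r_full

# Made-up data: 5 participants answering a 6-item questionnaire (1-5 scale)
responses = [
    [4, 5, 4, 4, 5, 5],
    [2, 1, 2, 2, 1, 2],
    [3, 3, 4, 3, 3, 3],
    [5, 4, 5, 5, 4, 4],
    [1, 2, 1, 1, 2, 2],
]
r_half, r_full = split_half_reliability(responses)
print(f"split-half r = {r_half:.2f}, Spearman-Brown estimate = {r_full:.2f}")
```

When the half-test correlation is positive, the corrected estimate is always at least as large as the raw one, which reflects the point that longer tests tend to be more reliable than shorter ones.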

The split-half method can also be used to improve a test’s reliability. For example, items on separate halves of the test that correlate weakly (e.g., r = .25) should be either removed or rewritten.

The split-half method is a quick and easy way to establish reliability. However, it can only be effective with large questionnaires in which all questions measure the same construct. This means it would not be appropriate for tests that measure different constructs.

For example, the Minnesota Multiphasic Personality Inventory has subscales measuring different constructs, such as depression, schizophrenia, and social introversion. Therefore, the split-half method would not be an appropriate way to assess the reliability of this personality test.

Test-Retest

The test-retest method assesses the external consistency of a test. Examples of appropriate tests include questionnaires and psychometric tests. It measures the stability of a test over time.

A typical assessment would involve giving participants the same test on two separate occasions. If the same or similar results are obtained, then external reliability is established.

Here’s how it works:

- A test or measurement is administered to participants at one point in time.

- After a certain period, the same test is administered again to the same participants without any intervention or treatment in between.

- The scores from the two administrations are then correlated using a statistical method, often Pearson’s correlation.

- A high correlation between the scores from the two test administrations indicates good test-retest reliability, suggesting the test yields consistent results over time.
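As a rough sketch of the correlation step, the following Python snippet correlates scores from two administrations of the same test. The scores are invented for illustration, and `pearson_r` is a hypothetical helper written here rather than a library function.

```python
from statistics import mean, pstdev

def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    mean_x, mean_y = mean(x), mean(y)
    # Average product of deviations (population covariance)
    cov = mean([(a - mean_x) * (b - mean_y) for a, b in zip(x, y)])
    return cov / (pstdev(x) * pstdev(y))

# Made-up scores for the same six participants, tested twice
time_1 = [12, 25, 7, 30, 18, 22]
time_2 = [14, 23, 9, 28, 17, 24]

r = pearson_r(time_1, time_2)
print(f"test-retest r = {r:.2f}")
```

Note that a correlation only captures whether participants keep their relative standing. If every participant scored exactly five points higher on the second occasion, the correlation would still be perfect, so it can be worth checking the mean scores as well.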

This method is especially useful for tests that measure stable traits or characteristics that aren’t expected to change over short periods. The disadvantage of the test-retest method is that it takes a long time for results to be obtained. The reliability can be influenced by the time interval between tests and any events that might affect participants’ responses during this interval.

Beck et al. (1996) studied the responses of 26 outpatients at two separate therapy sessions one week apart. They found a correlation of .93, demonstrating high test-retest reliability of the depression inventory.

This is an example of why reliability in psychological research is necessary. Without reliable tests, some individuals may not be successfully diagnosed with disorders such as depression and consequently would not be given appropriate therapy.

The timing of the test is important; if the duration is too brief, then participants may recall information from the first test, which could bias the results.

Alternatively, if the duration is too long, it is feasible that the participants could have changed in some important way which could also bias the results.

Inter-Rater Reliability

The inter-rater method assesses the external consistency of a test. This refers to the degree to which different raters give consistent estimates of the same behavior. Inter-rater reliability can be used for interviews.

Inter-rater reliability, often termed inter-observer reliability, refers to the extent to which different raters or evaluators agree in assessing a particular phenomenon, behavior, or characteristic. It’s a measure of consistency and agreement between individuals scoring or evaluating the same items or behaviors.

High inter-rater reliability indicates that the findings or measurements are consistent across different raters, suggesting the results are not due to random chance or subjective biases of individual raters.

Statistical measures, such as Cohen’s Kappa or the Intraclass Correlation Coefficient (ICC), are often employed to quantify the level of agreement between raters, helping to ensure that findings are objective and reproducible.
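To make the idea of chance-corrected agreement concrete, here is a minimal Python sketch of Cohen’s Kappa for two raters. The ratings and the category labels ("push", "none") are made up, and `cohens_kappa` is a hypothetical helper, not a call from a statistics package. Kappa is computed as (observed agreement − expected agreement) / (1 − expected agreement).

```python
from collections import Counter

def cohens_kappa(rater_a, rater_b):
    """Cohen's Kappa for two raters who each assign one category per item."""
    n = len(rater_a)
    # Proportion of items on which the two raters gave the same category
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance, from each rater's category frequencies
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    categories = set(counts_a) | set(counts_b)
    expected = sum(counts_a[c] * counts_b[c] for c in categories) / (n * n)
    # Undefined (division by zero) if both raters use a single identical category
    return (observed - expected) / (1 - expected)

# Made-up codes from two observers for the same 10 observation intervals
observer_1 = ["push", "none", "push", "push", "none",
              "none", "push", "none", "none", "push"]
observer_2 = ["push", "none", "push", "none", "none",
              "none", "push", "none", "push", "push"]

kappa = cohens_kappa(observer_1, observer_2)
print(f"Cohen's kappa = {kappa:.2f}")
```

In this invented example the observers agree on 8 of 10 intervals, but because half of that agreement would be expected by chance alone, Kappa is lower than the raw agreement rate.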

Ensuring high inter-rater reliability is essential, especially in studies involving subjective judgment or observations, as it provides confidence that the findings are replicable and not heavily influenced by individual rater biases.

In observational research, this is usually called inter-observer reliability. Here, researchers observe the same behavior independently (to avoid bias) and compare their data. If the data are similar, then they are reliable.

Where observer scores do not significantly correlate, then reliability can be improved by:

- Training observers in the observation techniques and ensuring everyone agrees with them.

- Ensuring behavior categories have been operationalized. This means that they have been objectively defined.

For example, if two researchers are observing the ‘aggressive behavior’ of children at a nursery, each would have their own subjective opinion about what aggression comprises.

In this scenario, they would be unlikely to record aggressive behavior in the same way, and the data would be unreliable.

However, if they were to operationalize the behavior category of aggression, this would be more objective and make it easier to identify when a specific behavior occurs.

For example, while “aggressive behavior” is subjective and not operationalized, “pushing” is objective and operationalized. Thus, researchers could count how many times children push each other over a certain duration of time.

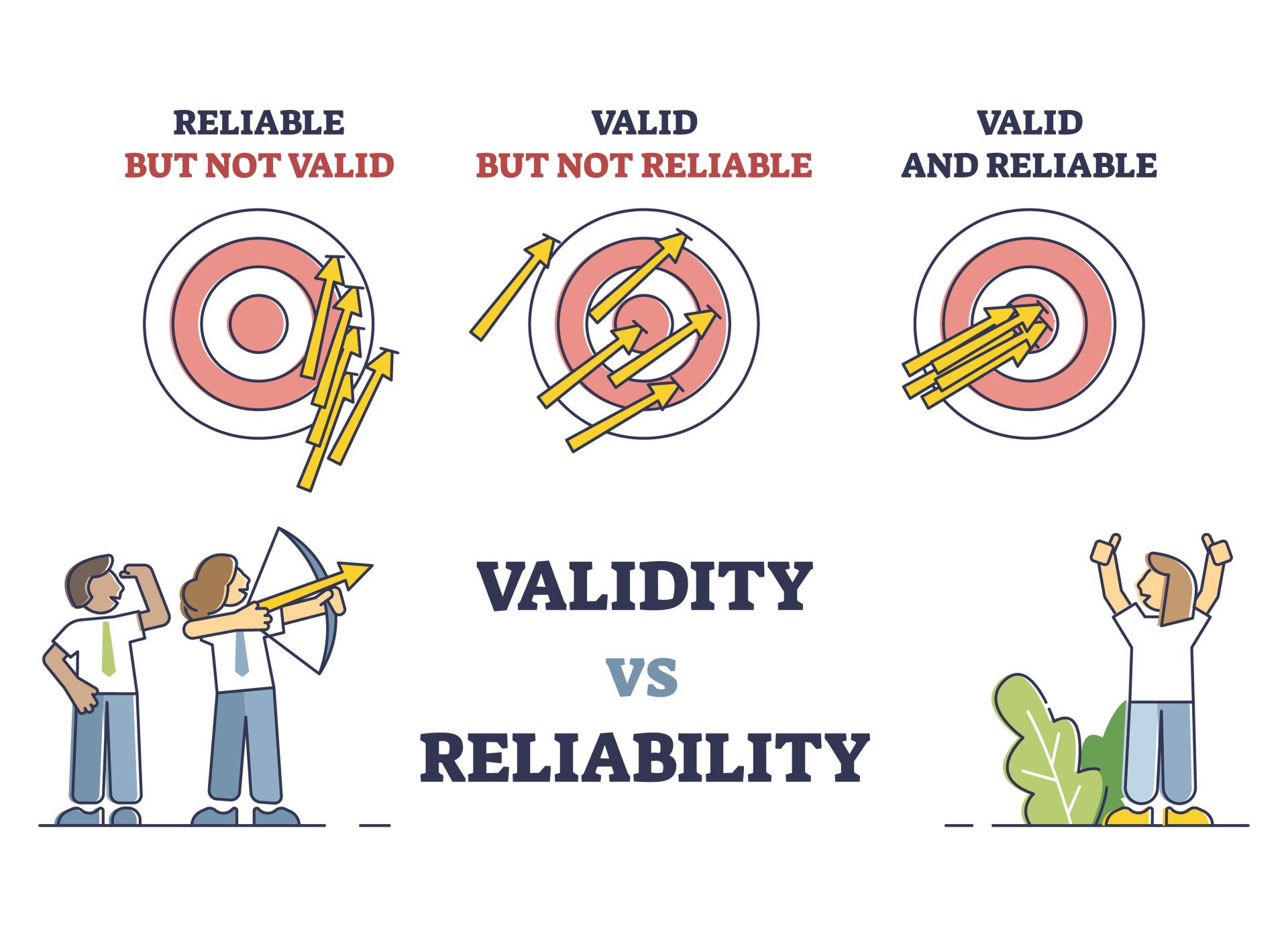

Validity vs. Reliability In Psychology

In psychology, validity and reliability are fundamental concepts that assess the quality of measurements.

- Validity pertains to the accuracy of an instrument. It addresses whether the test measures what it claims to measure. Different forms include content, criterion, construct, and external validity.

- Reliability concerns the consistency of an instrument. It evaluates whether the test yields stable and repeatable results under consistent conditions. Types of reliability include test-retest, inter-rater, and internal consistency.

A pivotal understanding is that reliability is a necessary but not sufficient condition for validity.

This means a test can be reliable, consistently producing the same results, without being valid, that is, without accurately measuring the intended attribute.

However, a valid test, one that truly measures what it purports to, must be reliable. In the pursuit of rigorous psychological research, both validity and reliability are indispensable.

References

Beck, A. T., Steer, R. A., & Brown, G. K. (1996). Manual for the Beck Depression Inventory. San Antonio, TX: The Psychological Corporation.

Hathaway, S. R., & McKinley, J. C. (1943). Manual for the Minnesota Multiphasic Personality Inventory. New York: Psychological Corporation.